With competition growing ever fiercer, mastering technical SEO should be a top priority for any business looking to improve its website’s performance. Technical SEO is the branch of SEO that focuses on the behind-the-scenes aspects of your website. It ensures that search engines can easily crawl and index your content, which is essential for improving your visibility in search results.

The real essence of technical search engine optimization is to please your visitors: make it easy for them to navigate your website and to access the messages you want to communicate.

Here’s a question: would you wait forever for a website to load, or would you hit the “back” button and go to another website that loads faster?

Another question: how frustrated would you be if you thought you had found what you were looking for, clicked the link, and were faced with “Oops! The page you are looking for does not exist!”?

Both are examples of poor user experience, which Google does not reward. Conversely, if your website is user friendly and offers an excellent user experience, Google tends to reward it by ranking it above your competitors’. The “magic words” in technical search engine optimization are Users First! Period! I can categorically say that this is non-negotiable!

Now, I want you to understand that technical SEO isn’t just about pleasing search engines; it is also about enhancing the user experience. When your website is optimized from a technical standpoint, it loads faster, functions smoothly, and provides a seamless experience for visitors. This, in turn, can lead to higher rankings on search engine results pages (SERPs) and more organic traffic to your site.

Table of Contents

- The Key Takeaway Here

- Understanding Technical SEO

- 1. Ensuring Crawlability and Indexing Optimization

- 2. Enhancing Site Speed for Better User Experience and Rankings

- 3. Mobile Optimization: Adapting to the Mobile-First Era

- 4. Using Structured Data for Better Search Visibility

- 5. Utilizing XML Sitemaps and Robots.txt Files Effectively

- 6. Managing Errors and Redirects for Seamless User Experience

- Advanced Technical SEO Strategies You Should Know About

The Key Takeaway Here

Key Takeaway: If you implement the technical SEO strategies I outline here, you will have the knowledge to significantly enhance your website’s visibility in search engine results and provide a superior user experience. Technical SEO is about more than keywords; it is about creating a seamless interaction between your site, its users, and search engines.

In this guide, titled “The Ultimate Guide to Technical SEO: Everything You Need to Know,” I will walk you through the essential components of technical SEO. Here’s what you’ll learn:

- Understanding Technical SEO: A deep dive into the basics of technical SEO and its critical role in an overall SEO strategy.

- Ensuring Crawlability and Indexing Optimization: Techniques to ensure efficient crawling and indexing by search engines.

- Enhancing Site Speed: How site speed influences user experience and rankings, with actionable steps to improve it.

- Mobile Optimization: Adapting your website for the mobile-first era through responsive design.

- Leveraging Structured Data: Using schema markup to enhance search visibility.

- Utilizing XML Sitemaps and Robots.txt Files: Guiding crawlers effectively through your site’s structure.

- Using LLMs.txt files: Making it easier for AI systems to understand your content.

- Managing Errors and Redirects: Maintaining seamless user experience while managing site changes.

Embark on this journey with me and you will come away with a comprehensive list of technical SEO best practices, empowering you to carry out technical SEO activities that drive tangible results for your business. Whether you’re focused on enhancing local business visibility or offering nationwide remote SEO services, understanding these fundamentals is key to outperforming competitors in the digital arena.

Understanding Technical SEO

Technical SEO is a crucial part of any effective SEO strategy. It serves as the foundation for both on-page and off-page optimization efforts. By focusing on the technical aspects of your website, you can ensure that search engines can easily crawl, index, and understand your content. This not only leads to better visibility in search engine results but also improves the overall user experience.

Key Components of Technical SEO

Here are the key components that are essential for optimizing your site’s performance through technical SEO:

- Crawlability: This ensures that search engines can access and navigate your site’s pages efficiently. To achieve this, you need to create an SEO-friendly site structure with clear navigation paths and effective internal linking.

- Indexing: Indexing refers to how search engines store and organize the information they gather. Proper indexing is vital for your pages to appear in relevant search results.

- Site Speed: Site speed impacts both user experience and search rankings. Techniques like file compression and using a Content Delivery Network (CDN) can significantly enhance page load times.

- Mobile Optimization: With mobile devices dominating internet usage, ensuring your website is mobile-friendly is crucial. Implementing responsive design principles helps cater to this need.

- Structured Data: Employing schema markup provides search engines with detailed context about your content, which can make your pages eligible for enhanced listings in SERPs.

- XML Sitemaps: These act as a roadmap for crawlers, guiding them through your site’s structure to ensure comprehensive crawling and indexing.

- Robots.txt Files: Manage crawler behavior by specifying which parts of your website should or shouldn’t be crawled by search engines.

- LLMs.txt files: A proposed convention in which a Markdown file at /llms.txt gives large language models (LLMs) a concise, curated overview of your website and links to its most important content. It is a guide rather than an access-control mechanism: rules for AI crawlers are still set in robots.txt. Used together, the two help businesses shape how their content is accessed and represented in AI-driven search results.

Understanding these components and how they work together is essential for conducting thorough technical SEO analysis — whether you’re a business owner looking to drive organic traffic or a freelancer focusing on advanced technical SEO strategies.

Tools such as Google Search Console and PageSpeed Insights can help you identify areas for improvement and implement best practices effectively. The right technical optimizations lay the groundwork for successful on-page SEO efforts, ultimately driving higher rankings and better online visibility.

1. Ensuring Crawlability and Indexing Optimization

Creating an SEO-friendly site structure is the foundation for effective crawlability. Search engines deploy bots, often referred to as spiders, to crawl websites. These bots follow links across pages to gather data. A well-organized site structure ensures that these crawlers can efficiently navigate through your content, indexing it accurately for search engine results.

Note: If search engines can’t crawl your website, you might as well forget ranking for anything and forget any traffic unless you are ready to pay for it.

Internal Linking and Crawlability

Image: Thanks to Moz.com

Internal linking is the practice of connecting different pages within the same website through hyperlinks. This network not only enhances navigation but also plays a significant role in a site’s crawlability. When search engine spiders traverse your URL structure, they rely on internal links to discover and index content efficiently. A well-structured pattern of links lets these crawlers move seamlessly from one page to another, ultimately improving indexation and visibility in search results.

Internal linking fosters an improved user experience by guiding visitors effortlessly through related topics and resources on your website. By strategically placing contextual links, users are encouraged to explore deeper aspects of your content, increasing their time spent on site and potentially boosting conversion rates. Successful internal linking requires striking a balance between assisting search engine spiders with an organized URL structure while simultaneously enriching the browsing journey for users seeking valuable information or products.

In a more sophisticated approach, SEO professionals use anchor text that accurately reflects the target keywords, further helping search engines understand page context and relevance. Beyond the technical benefits, thoughtful link placement communicates trustworthiness and authority to both users and algorithms, forging stronger engagement pathways throughout your site. Internal linking is thus much more than simple connectivity; it’s about orchestrating a cohesive ecosystem where crawlability dovetails with user satisfaction.
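To make the crawlability side of internal linking concrete, here is a minimal Python sketch that treats a site’s internal links as a graph and uses breadth-first search to measure each page’s click depth from the homepage and to spot orphan pages that no internal link reaches. The `site_links` dictionary and its URLs are hypothetical sample data (loosely shaped like a pillar page with cluster articles); on a real site you would build it from a crawl.

```python
from collections import deque

# Hypothetical internal-link graph: page -> pages it links to.
site_links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/technical-seo", "/contact"],
    "/blog": ["/blog/pillar-guide"],
    "/blog/pillar-guide": ["/blog/cluster-1", "/blog/cluster-2", "/services/technical-seo"],
    "/blog/cluster-1": ["/blog/pillar-guide"],
    "/blog/cluster-2": ["/blog/pillar-guide"],
    "/services/technical-seo": [],
    "/contact": [],
    "/old-landing-page": ["/contact"],  # nothing links here: an orphan page
}


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; returns the clicks needed to reach each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest click path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], start: str = "/") -> set[str]:
    """Pages that exist in the graph but cannot be reached by following internal links."""
    return set(links) - set(click_depths(links, start))


if __name__ == "__main__":
    for page, depth in sorted(click_depths(site_links).items(), key=lambda item: item[1]):
        print(depth, page)
    print("Orphans:", orphan_pages(site_links))
```

Pages that sit many clicks deep, or that no internal link reaches at all, are the ones crawlers are most likely to find late or miss, so they are good candidates for new contextual links.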

Attention: Internal linking is a powerful instrument when employing the pillar-cluster strategy in content optimization. While working for a digital company, I implemented this method on our clients’ websites. The result was an increase in ranking keywords, with many of them reaching the first page of Google for multiple key phrases, which led to spikes in organic traffic. That is just one of the powers of linking pages of related content together.


The Search Engine Indexing Process

Once crawlers have accessed your site, they proceed with indexing the content. This involves analyzing and storing information in the search engine’s database, making it retrievable when users perform searches. Optimizing your site’s content for indexing means ensuring that all valuable pages are accessible and properly tagged.

Managing Duplicate Content with Canonical Tags

Duplicate content can lead to confusion for both users and search engines, potentially harming your site’s rankings. Canonical tags come into play here by specifying the preferred version of a web page when multiple versions exist. This helps direct search engine bots to index the correct page, avoiding dilution of page authority.
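A canonical tag is a `<link rel="canonical" href="...">` element in a page’s `<head>`. As a minimal sketch of how you might audit it, the Python below uses the standard-library `html.parser` to pull canonical URLs out of a page. The page markup and URLs are hypothetical, and treating anything other than exactly one canonical tag as a problem is my own rule of thumb for the check, not a documented requirement.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values keep their case.
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def find_canonicals(html: str) -> list[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


# Hypothetical duplicate URL, e.g. /products/blue-widget?utm_source=newsletter,
# whose <head> points search engines at the preferred version.
duplicate_page = """
<html><head>
  <title>Blue Widget</title>
  <link rel="canonical" href="https://www.example.com/products/blue-widget" />
</head><body>...</body></html>
"""

canonicals = find_canonicals(duplicate_page)
if len(canonicals) != 1:
    print(f"Expected exactly one canonical tag, found {len(canonicals)}")
else:
    print("Canonical URL:", canonicals[0])
```

Keep in mind that search engines treat the canonical tag as a strong hint rather than a command, so it works best when your internal links and sitemap point at the same preferred URL.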

Utilizing Noindex Tags

In some cases, certain pages on your site may not need to appear in search results. Implementing noindex tags tells search engines not to include specific pages in their index. This can be particularly useful for administrative pages or duplicate content that serves no purpose in search visibility.
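A noindex directive can be delivered either as a `<meta name="robots" content="noindex">` tag or as an `X-Robots-Tag` HTTP response header. Here is a minimal Python sketch that checks both for a given page; the sample markup is hypothetical, and the header parsing is deliberately simplified (it ignores crawler-specific prefixes such as `googlebot: noindex`).

```python
from html.parser import HTMLParser

NOINDEX_VALUES = {"noindex", "none"}  # "none" is shorthand for "noindex, nofollow"


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(part.strip().lower() for part in content.split(","))


def is_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if the page's meta tags or its X-Robots-Tag header value ask not to be indexed."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    header_directives = {part.strip().lower() for part in x_robots_tag.split(",")}
    return bool((finder.directives | header_directives) & NOINDEX_VALUES)


# Hypothetical admin page that should stay out of search results.
admin_page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindex(admin_page))
```

One caveat worth remembering: a crawler can only see a noindex directive on a page it is allowed to fetch, so don’t also block that page in robots.txt.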

By focusing on these aspects of crawlability and indexing optimization, you lay the groundwork for improved search engine performance and user engagement. As the landscape of SEO continues to evolve, maintaining an agile approach towards technical practices becomes pivotal in sustaining a competitive edge.

2. Enhancing Site Speed for Better User Experience and Rankings

The speed at which a website loads is the first user experience factor a visitor notices. As I mentioned earlier, nobody wants to spend minutes waiting for a web page to load. Load time matters not only for keeping users happy but also for how well the site ranks on search engines. Search engines like Google favor fast-loading sites because they offer a better experience for users, which can directly impact rankings.

Site speed is a critical factor in both user experience and search engine rankings that is often underestimated in its influence on a website’s success. Lightning-fast load times not only enhance user satisfaction but are also pivotal for keeping potential customers engaged. A study from Google suggests that if a page takes longer than three seconds to load, more than half of mobile users will abandon the site altogether. This highlights how crucial even marginal speed improvements can be for retaining traffic and reducing bounce rates.

Page Speed Also Improves SEO Performance of a Website

Beyond merely satisfying visitors, enhancing site speed can also significantly impact your site’s SEO performance. Search engines like Google prioritize websites with faster load times, which serve as an indirect signal of site quality and credibility. By optimizing your site’s speed through strategies like compressing images, leveraging browser caching, and minimizing server response time, you’re not just creating a seamless experience; you’re fostering increased visibility across search engine results pages (SERPs). Investing in these optimizations could lead to higher conversion rates and improved visitor retention—an essential edge in today’s fast-paced digital marketplace.

Thus, viewing site speed as an ongoing commitment rather than a one-off task encourages continuous improvement aligned with evolving technology standards. Incorporating tools that offer real-time analytics can help you identify areas for enhancement promptly while benchmarking against industry peers offers insights into competitive advantages. Emphasizing efficiency conveys to users that you value their time—a subtle yet powerful message that enhances brand loyalty and sustains engagement long-term.

Factors That Affect Site Speed

There are several factors to consider when it comes to improving website speed. Here is a summary of some of the most significant ones:

- Server Speed: The speed of your website depends partly on your hosting provider. Even with today’s advanced technology, some providers still host websites on old, slow servers. Therefore, don’t opt for the cheapest hosting platforms.

- File Compression Techniques: Large files can slow down your website, so it’s important to compress them. Tools like Gzip can significantly reduce the size of your CSS, HTML, and JavaScript files, making them quicker to load.

- Content Delivery Network (CDN): A CDN is a network of servers located in different parts of the world. By using a CDN, you can deliver your website’s content from the server that is closest to each individual user. This helps reduce latency and speeds up page load times.
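To see why compression matters, here is a minimal Python sketch using the standard-library `gzip` module on a made-up, deliberately repetitive stylesheet. In production you wouldn’t do this by hand: the web server or CDN compresses text responses and sends a `Content-Encoding: gzip` header when the browser advertises support in its `Accept-Encoding` request header.

```python
import gzip

# Hypothetical, highly repetitive CSS. Real stylesheets compress less dramatically,
# but text assets (HTML, CSS, JavaScript) usually shrink substantially.
css = ".button { color: #ffffff; background: #0055aa; padding: 8px 16px; }\n" * 200

raw_bytes = css.encode("utf-8")
compressed = gzip.compress(raw_bytes, compresslevel=6)

savings = 1 - len(compressed) / len(raw_bytes)
print(f"Original: {len(raw_bytes)} bytes, gzipped: {len(compressed)} bytes ({savings:.0%} smaller)")

# Compression is lossless: the browser decompresses back to exactly the same file.
assert gzip.decompress(compressed) == raw_bytes
```

Because the sample repeats one rule 200 times, its savings are unrealistically high; expect smaller but still meaningful reductions on real files.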

The Impact of Site Speed on User Experience and Business Growth

A slow website can frustrate users and lead to higher bounce rates, meaning more people leave your site without taking any action. This can result in missed opportunities for conversions and sales. One widely cited industry statistic holds that even a one-second delay in page response can lead to a 7% decrease in conversions.

Search engines understand the importance of site speed as well. Websites that load quickly are more likely to rank higher in search engine results pages (SERPs). This makes optimizing your site’s speed an essential part of improving its visibility online.

As you work on refining your technical SEO strategies, remember to prioritize reducing your webpage’s load time. By doing so, you’ll not only enhance the experience for your users but also increase the chances of your site appearing at the top of search results.

There are many tools you can use to monitor or check speed, either across an entire website (which takes a very long time if the site is very large) or on a page-by-page basis. My favorite is PageSpeed Insights, because Google suggests it when you check Core Web Vitals in your Search Console dashboard. Since it is a Google recommendation, I follow it too. Use the tool to check and monitor the page speed of all relevant pages on your website.
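PageSpeed Insights and Search Console rate each Core Web Vital as “Good”, “Needs improvement”, or “Poor”, assessed at the 75th percentile of real page loads. The minimal Python sketch below applies the thresholds Google publishes for Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS) as I understand them at the time of writing; verify them against Google’s current documentation before relying on them. The page measurements are hypothetical.

```python
# Core Web Vitals thresholds: (upper bound for "Good", upper bound for "Needs improvement").
# Values as published by Google at the time of writing; check current docs before relying on them.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def rate(metric: str, value: float) -> str:
    """Classify one 75th-percentile measurement against its thresholds."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "Good"
    if value <= needs_improvement:
        return "Needs improvement"
    return "Poor"


# Hypothetical 75th-percentile field data for one page.
page_metrics = {"LCP": 3.1, "INP": 180, "CLS": 0.02}

for metric, value in page_metrics.items():
    print(f"{metric}: {value} -> {rate(metric, value)}")
```

In this made-up example, LCP is the metric to work on first, which is typically where image compression, a CDN, and faster hosting pay off.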


3. Mobile Optimization: Adapting to the Mobile-First Era

Since the advent of cell phones, tablets, laptops, and other mobile devices, it has become necessary to build websites that can be viewed comfortably on all of these devices.

Therefore, mobile optimization is extremely important. Search engines like Google prioritize websites that are mobile-friendly due to their mobile-first indexing approach. To maintain and improve search engine rankings, it is crucial to ensure that your website offers a smooth experience on all devices.

A key aspect of this process is implementing a mobile-friendly design. This means using responsive web design principles that allow your site to adapt seamlessly to different screen sizes and orientations. With a responsive design, elements such as images, text, and navigation menus automatically adjust to provide an optimized view whether accessed on a smartphone, tablet, or desktop.

Techniques for Mobile Optimization

- Responsive Web Design: Use CSS media queries to create flexible layouts that adjust based on device dimensions.

- Viewport Meta Tag: Include this tag in your HTML to control layout adjustments and scaling on various devices.

- Mobile-Friendly Navigation: Simplify menus and use touch-friendly buttons for better accessibility.

- Optimize Images and Media: Implement lazy loading techniques and serve appropriately sized images for different devices to save bandwidth and decrease load times.
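Two items on that list are easy to check in a page’s HTML: the viewport meta tag and lazy-loaded images. Here is a minimal Python sketch using the standard-library `html.parser`; the page markup is hypothetical, and the checks are intentionally basic (they look only for `width=device-width` and `loading="lazy"`).

```python
from html.parser import HTMLParser


class MobileAudit(HTMLParser):
    """Records the viewport meta tag and any <img> tags that are not lazy-loaded."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport = None           # content of <meta name="viewport">, if present
        self.images_without_lazy = []  # src of <img> tags lacking loading="lazy"

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""
        elif tag == "img" and (attributes.get("loading") or "").lower() != "lazy":
            self.images_without_lazy.append(attributes.get("src") or "")


def audit(html: str) -> dict:
    parser = MobileAudit()
    parser.feed(html)
    has_responsive_viewport = (
        parser.viewport is not None
        and "width=device-width" in parser.viewport.replace(" ", "")
    )
    return {
        "responsive_viewport": has_responsive_viewport,
        "images_without_lazy": parser.images_without_lazy,
    }


# Hypothetical page: responsive viewport, one eager hero image, one lazy gallery image.
page = """
<html><head>
  <meta name="viewport" content="width=device-width, initial-scale=1">
</head><body>
  <img src="/hero.jpg" alt="Hero">
  <img src="/gallery-1.jpg" alt="Gallery" loading="lazy">
</body></html>
"""
print(audit(page))
```

Treat the list of non-lazy images as prompts to review rather than errors: an image visible as soon as the page opens (like a hero image) is usually better loaded eagerly, since lazy-loading it can delay the page’s main content.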

By implementing these techniques, you can ensure that your site remains competitive in a mobile-dominated market. Focusing on mobile optimization not only improves user experience but also aligns your website with search engine expectations, ultimately enhancing your site’s performance in search results.

Mobile-First Optimization Affects Conversion Rates!

A few years ago, I was tasked with finding out why a website was getting a lot (and I mean a lot) of traffic while its conversion rate was extremely low (around 2%). The monitoring tool we were using showed that the majority of the traffic was coming from mobile devices. I checked the landing pages on my personal cellphone and discovered that the Call-To-Action buttons were out of focus. I suggested redesigning the website for mobile devices. As a result, the conversion rate jumped to a whopping 31% within a week or two of the redesign. Yes! Mobile optimization does affect conversion rate, which also translates to revenue.


4. Using Structured Data for Better Search Visibility

Image: Courtesy of LinkedIn.

The use of structured data is a powerful way to improve search visibility and performance. Schema markup (the schema.org vocabulary, which can be written as JSON-LD, Microdata, or RDFa) allows website owners to give search engines more specific information about their content. This extra information can change how your web pages appear in search engine results pages (SERPs), potentially showing rich snippets that attract more clicks.
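Google recommends JSON-LD as the format for structured data, so here is a minimal Python sketch that builds a schema.org `Product` object and wraps it in the `<script type="application/ld+json">` tag you would place in the page. The product name, URLs, brand, and price are hypothetical placeholders; validate real markup with Google’s Rich Results Test, and remember that valid markup makes a page eligible for rich results without guaranteeing them.

```python
import json

# Hypothetical product details; replace them with your own page data.
product = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Widget",
    "image": "https://www.example.com/images/example-widget.jpg",
    "description": "A sample product used to illustrate Product markup.",
    "brand": {"@type": "Brand", "name": "ExampleCo"},
    "offers": {
        "@type": "Offer",
        "price": "49.99",
        "priceCurrency": "USD",
        "availability": "https://schema.org/InStock",
    },
}

# The resulting tag can go in the page's <head> or <body>.
snippet = '<script type="application/ld+json">\n' + json.dumps(product, indent=2) + "\n</script>"
print(snippet)
```

Generating the markup from the same data that renders the page (rather than typing it by hand) helps keep prices and availability in the markup consistent with what visitors actually see.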

I personally consider structured data an advancement in the way information is communicated to search engines, a way to provide richer context beyond mere keywords. Embedding structured data into a website enables search engines to understand the content’s intent and relationships in a more intricate way. This enhanced comprehension allows for more precise indexing and can improve visibility in organic search results. When implemented effectively, structured data can elevate a site’s listings into rich snippets or other prominent features like knowledge panels or carousels, assets that capture users’ attention immediately.

The strategic use of structured data aligns with an evolving focus on semantic search and user experience improvements. For businesses aiming for long-term digital success, it’s not just about being present but ensuring their presence resonates accurately with users’ queries and needs. When you use schema markup tailored to your industry, whether e-commerce products, local business details, or event information, your website can stand out significantly from competitors who fail to use these tactics. Voice-assisted searches are also becoming increasingly widespread, which makes well-structured schema data on your website even more critical for delivering concise and relevant responses.

Schema Structured Data: A Must-Have Tool for Digital Marketing

Marketing professionals should recognize that structured data is not a static solution but an ongoing dialogue between websites and search algorithms. Regularly revisiting and refining your markup helps keep it aligned with evolving SEO guidelines and with changes to your site’s architecture. This proactive stance not only enhances visibility but also reinforces brand credibility through authoritative representation across digital channels. Embracing structured data isn’t merely about maintaining relevancy; it fundamentally transforms how brands interact within the digital ecosystem.

Benefits of Schema Markup

- Better Search Appearance: By using schema markup, web pages can show rich snippets like star ratings, product prices, and event details. These enhancements can increase the CTR (Click-Through Rate) as users are more likely to click on results that provide immediate answers or engaging visuals.

- Improved Understanding by Search Engines: Schema markup helps search engines understand the content better by categorizing it under specific types such as articles, recipes, or events, which makes it a powerful way for small businesses to stand out from the competition. This improved understanding can lead to better indexing and ranking in relevant searches.

- Optimizing for Voice Search: With the rise of voice-activated devices, using structured data can cater to voice search queries that often require concise and precise answers. Schema helps in delivering these targeted responses effectively.

For businesses looking to increase organic traffic and rank for multiple keywords, adding schema markup is an important strategy in improving the overall technical SEO framework. By providing more detailed information through structured data, websites not only become more visible but also gain trust from both users and search engines.

A Mini Case Study!

One client’s website was in the manufacturing industry. It was targeting a particular commercial-intent keyword with an average search volume of 3,000, and because of fierce competition it was stuck between positions 15 and 20 on Google’s SERPs. I was determined to reach the first page. After we implemented the “Striking Distance Strategy” and strategic schema structured data, we finally made it to the first page and appeared in the Knowledge Panel with an image of the product and a snippet of description. The result: a drastic jump in click-through rate (CTR) and, of course, a corresponding increase in conversions. I believe it would be a digital marketing “crime” not to implement these strategies on the important pages of a website.


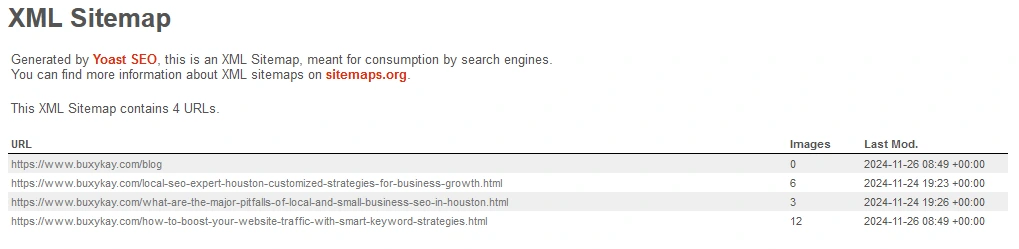

5. Utilizing XML Sitemaps and Robots.txt Files Effectively

To maximize a website’s potential for search engine visibility, integrating XML sitemaps and robots.txt files is a necessity. An elegant design and user-friendly interface can attract visitors; however, the behind-the-scenes architecture must also cater to search engines. XML sitemaps serve as blueprints that outline the terrain of your site for crawlers, helping every nook and cranny of your content receive appropriate attention. By listing pages in a structured way, sitemaps give crawlers direct access to important URLs, which supports indexing, a critical factor when aiming for top search engine result placements.

Meanwhile, a carefully written robots.txt file acts as a gatekeeper within this navigational framework. Rather than opening every door indiscriminately, it tells crawlers which sections they should not crawl. Note that robots.txt governs crawling, not indexing: a blocked URL can still appear in results if other pages link to it, and because the file is publicly readable it is not a way to keep information private. Used well, it keeps crawlers focused on priority content and away from low-value paths. Together, XML sitemaps and robots.txt files strike a balance between openness and control, promoting both extensive coverage and precision in how your pages are crawled.

In today’s digital landscape, where competition is relentless and algorithms are ever-evolving, leveraging these tools is non-negotiable for any business serious about its online presence. When executed thoughtfully within a holistic SEO strategy, they are more than technical necessities; they are pivotal elements of an agile yet robust foundation for growth. With well-optimized XML sitemaps guiding crawlers through large sites and disciplined robots.txt directives restricting their paths, websites can be crawled efficiently while staying focused on delivering value to users and crawlers alike.

XML Sitemaps: Your Website’s Blueprint

XML sitemaps act as a roadmap for search engines, highlighting the most important pages of your site. By submitting an XML sitemap to search engines, you can:

- Guide crawlers through your website’s architecture.

- Help ensure that all critical pages are discovered and considered for indexing.

- Provide metadata about URLs, such as the last update date and change frequency.

For sites with complex structures or extensive content, XML sitemaps are indispensable in maintaining comprehensive indexing.
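As a minimal sketch of what such a file contains, the Python below builds a sitemap in the sitemaps.org format with the standard-library `xml.etree.ElementTree`. The URLs and dates are hypothetical. I only emit `<loc>` and `<lastmod>` because Google has said it ignores `<changefreq>` and `<priority>` and uses `<lastmod>` when it is consistently accurate.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical pages with their last-modified dates (W3C Datetime format, e.g. YYYY-MM-DD).
pages = [
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/services/technical-seo", "2024-04-18"),
    ("https://www.example.com/blog/pillar-guide", "2024-04-30"),
]


def build_sitemap(entries) -> bytes:
    """Return a UTF-8 encoded <urlset> document with one <url> per (loc, lastmod) pair."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


print(build_sitemap(pages).decode("utf-8"))
```

Save the output as sitemap.xml at your site root, then submit it in Google Search Console or reference it with a `Sitemap:` line in robots.txt. A single sitemap file is limited to 50,000 URLs (and 50 MB uncompressed), so larger sites split their URLs across several files listed in a sitemap index.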

Robots.txt Files: Controlling Crawl Access

The robots.txt file is a powerful tool for managing crawler access to certain parts of your site. By specifying which areas should be excluded from crawling, it helps:

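- Keep crawlers away from duplicate or non-essential pages. (To keep a page out of the index, use a noindex tag instead; a URL blocked in robots.txt can still be indexed if other pages link to it.)

- Discourage crawling of private areas such as admin pages. Because robots.txt is publicly readable, it does not protect sensitive information; use authentication for that.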

- Optimize crawl budget by directing bots towards high-value content.

When used judiciously, robots.txt files enhance technical SEO efforts by fine-tuning crawler interaction with your site.
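Before deploying robots.txt changes, it helps to test which URLs they actually block. Here is a minimal Python sketch using the standard-library `urllib.robotparser`; the rules, paths, and domain are hypothetical. The `GPTBot` group illustrates how rules for an AI crawler (OpenAI’s crawler token) sit alongside the general rules. As far as I know, this parser does plain prefix matching and does not implement Google’s `*` and `$` wildcard extensions, so test wildcard rules with Google’s own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one AI crawler entirely, keep all crawlers out of
# admin and internal-search URLs, and point them at the XML sitemap.
robots_txt = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

for path in ["/", "/blog/pillar-guide", "/admin/settings", "/search?q=seo"]:
    url = "https://www.example.com" + path
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"Googlebot {path} -> {verdict}")

print("GPTBot allowed on blog:", parser.can_fetch("GPTBot", "https://www.example.com/blog/pillar-guide"))
print("Sitemaps:", parser.site_maps())  # site_maps() requires Python 3.8+
```

A quick check like this catches the classic mistake of a stray `Disallow: /` under `User-agent: *`, which would block your entire site from every crawler.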

6. Managing Errors and Redirects for Seamless User Experience

Encountering 404 errors can be a jarring experience for users, often leaving them disoriented and inclined to abandon your site in search of more reliable alternatives. The disruption doesn’t just end with the user; it reverberates through your site’s overall performance, casting shadows over your carefully curated digital presence. These broken links serve as unwelcome detours that stall the seamless user journey you intend to offer, leading visitors astray from key content and potential conversions. To mitigate this risk, it’s crucial to regularly audit and update your links, ensuring all paths lead exactly where you intend them to go.

From an SEO perspective, broken links are more than mere inconveniences; they can signal subpar website maintenance to search engines. As algorithms become increasingly sophisticated, they prioritize websites that consistently provide value and smooth user experiences. A proliferation of broken links can make your site look unreliable or neglected, which may in turn lead to dwindling visibility and lower rankings on search engine results pages (SERPs). Proactively monitoring for these errors not only preserves the integrity of your site’s architecture but also reinforces its credibility in the eyes of both users and algorithms.

Embedding user-friendly strategies like a custom 404 error page can turn potentially negative experiences into opportunities for engagement. Instead of presenting visitors with a blunt dead-end message, consider designing a helpful page that offers navigational guidance or suggests related content based on their initial intent. This approach not only cushions the impact of landing on an unexpected error but also shows hospitality, hinting that what lies beyond the immediate friction is worth exploring.

Consequences of Broken Links:

- User Experience: Users encountering dead ends may leave your site, increasing bounce rates.

- SEO Performance: Search engine crawlers struggle to index pages efficiently, impacting search visibility.

To address these challenges, implementing effective redirects is essential when URLs are altered or removed. Redirects help retain the value of backlinks and maintain link equity, ensuring search engines and users are seamlessly guided to relevant content.

Best Practices for Implementing Redirects:

- 301 Redirects: Use these for permanent URL changes to signal search engines about the new location of a page, preserving link equity.

- 302 Redirects: Suitable for temporary changes where the original URL is expected to return.

- Audit Regularly: Check for broken links periodically using tools like Google Search Console and address them promptly.

- Update Internal Links: Ensure all internal links point to the updated URLs to avoid unnecessary redirects.
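The advice to avoid unnecessary redirects also applies to the redirect rules themselves: chains (A to B to C) add extra hops, and loops never resolve. Here is a minimal Python sketch that follows a hypothetical map of 301 redirects, flattens chains so every old URL points straight at its final destination, and flags loops. It only computes the map; how you deploy the rules (server configuration, CMS plugin, CDN) depends on your setup.

```python
# Hypothetical 301 redirect map (old path -> new path), e.g. after a site restructure.
redirects = {
    "/old-services": "/services",
    "/services-2019": "/old-services",  # chain: /services-2019 -> /old-services -> /services
    "/blog/seo-tips": "/blog/technical-seo-guide",
    "/loop-a": "/loop-b",
    "/loop-b": "/loop-a",               # misconfiguration: a redirect loop
}


def resolve(url: str, rules: dict[str, str], max_hops: int = 10) -> tuple[str, int]:
    """Follow redirects to the final URL; raise ValueError on loops or overly long chains."""
    seen = {url}
    hops = 0
    while url in rules:
        url = rules[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"Redirect loop or chain too long at {url}")
        seen.add(url)
    return url, hops


def flatten(rules: dict[str, str]) -> dict[str, str]:
    """Point every old URL straight at its final destination, reporting loops for manual fixes."""
    flat = {}
    for source in rules:
        try:
            flat[source] = resolve(source, rules)[0]
        except ValueError:
            print(f"Fix manually: {source} is part of a redirect loop")
    return flat


for old, new in flatten(redirects).items():
    print(f"301 {old} -> {new}")
```

The same final destinations are also the URLs your internal links should point to, so the flattened map doubles as a checklist for updating links.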

By managing errors proactively and implementing strategic redirects, you enhance user satisfaction while safeguarding your site’s SEO integrity. This approach fosters a smooth navigation experience, reflecting positively on both users and search engines.

Advanced Technical SEO Strategies You Should Know About

For those looking to push the boundaries of their website’s performance, delving into advanced technical SEO practices is essential. These strategies go beyond the basics, offering a pathway for seasoned professionals to refine and perfect their digital presence.

Tackling advanced technical SEO depends heavily on a solid coding background. There is a lot of code to deal with here!

1. JavaScript SEO

Understanding how search engines interact with JavaScript-driven content is crucial. By optimizing how your JavaScript applications are rendered and indexed, you can ensure all content is accessible to both users and search engines.

2. Log File Analysis

Analyzing server log files provides insights into how search engines crawl your site. This data reveals patterns and potential issues in the crawling process, allowing proactive optimizations.
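As a minimal sketch of log file analysis, the Python below parses lines in the common Apache/Nginx “combined” log format and counts which paths Googlebot requested and which status codes it received. The log lines are hypothetical, and real servers may use a different format, so adjust the regular expression to match yours. Because user-agent strings can be spoofed, Google recommends verifying real Googlebot traffic with a reverse DNS lookup before trusting the counts.

```python
import re
from collections import Counter

# Combined Log Format: IP, identity, user, [time], "request", status, size, "referrer", "user agent".
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical log lines: three Googlebot requests and one regular visitor.
sample_log = """\
66.249.66.1 - - [10/May/2024:10:00:01 +0000] "GET /blog/pillar-guide HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
203.0.113.7 - - [10/May/2024:10:01:00 +0000] "GET /blog/pillar-guide HTTP/1.1" 200 5120 "https://www.example.com/" "Mozilla/5.0 (Windows NT 10.0)"
66.249.66.1 - - [10/May/2024:10:02:11 +0000] "GET /blog/pillar-guide HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
"""


def googlebot_summary(log_text: str) -> tuple[Counter, Counter]:
    """Count Googlebot requests per path and per HTTP status code."""
    paths, statuses = Counter(), Counter()
    for line in log_text.splitlines():
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match["agent"]:
            paths[match["path"]] += 1
            statuses[match["status"]] += 1
    return paths, statuses


crawled_paths, status_codes = googlebot_summary(sample_log)
print("Most crawled:", crawled_paths.most_common(5))
print("Status codes:", dict(status_codes))
```

Patterns worth looking for include important pages that Googlebot rarely visits, crawl activity spent on 404s or redirect chains, and parameterized URLs soaking up crawl budget.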

3. Edge SEO

Utilizing serverless technologies to deploy changes directly at the edge can enhance site speed and performance without altering backend code. This approach allows for rapid SEO adjustments in response to market changes.

These technical components of SEO emphasize continuous improvement and innovation, aligning with the ever-evolving landscape of search engine algorithms and user expectations. For an in-depth exploration of these strategies, consult resources like “The Ultimate Guide to Technical SEO: Everything You Need to Know” by Moz, which covers these advanced techniques in detail. Incorporating these strategies into your SEO practices can give you a competitive edge and help you achieve long-term success in the digital landscape.